Medical Segmentation

Research project on medical segmentation using U-Net

Convolutional Neural Networks (CNNs) have been used extensively in critical sectors such as healthcare, for example to diagnose diseases such as tumors from medical images more efficiently. However, structures in medical images are often complicated and come in various shapes and forms. This complexity makes tasks such as binary segmentation of tumors (foreground) from irrelevant pixels (background) difficult. Issues such as blurred boundaries between background and foreground have limited how much these technologies are relied upon.

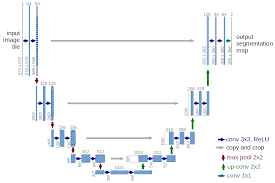

One of the architectures currently used in fields such as medical imaging is the U-Net, an encoder-decoder network designed specifically for segmentation tasks.

Each layer in the U-Net is composed of two main operations: convolution and max-pooling. In the U-Net, the convolution layer holds learnable parameters in the form of a rectangular kernel, which extracts patterns from the feature map passed into the layer. Max-pooling downsamples the feature map by keeping the largest value in each spatial window of every channel. Stacked together, these layers give the U-Net its strong representation learning ability for medical images.
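The two operations can be illustrated with a minimal pure-Python sketch on a single-channel image. This is not the code used in this project: the function names `conv2d` and `max_pool2d`, the toy image, and the fixed 2x2 kernel are all assumptions for illustration. In a real U-Net the kernel weights are learned and the operations run over many channels in a deep learning framework.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation of a single-channel image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            # Weighted sum over the rectangular window anchored at (i, j).
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling over size x size windows."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            # Keep only the largest value in each window.
            max(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy 5x5 image and a hypothetical fixed kernel (learned in practice).
image = [
    [1, 2, 0, 1, 3],
    [0, 1, 2, 3, 1],
    [1, 0, 1, 2, 2],
    [2, 1, 0, 1, 0],
    [0, 3, 1, 0, 1],
]
kernel = [[1, 0], [0, 1]]

features = conv2d(image, kernel)  # 4x4 feature map
pooled = max_pool2d(features)     # 2x2 feature map
```

Both functions slide a fixed rectangular window over the grid, which is why this form vectorizes well and also why it is tied to rectangular geometry.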

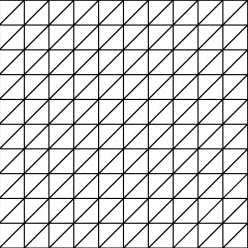

However, these operations are confined by their geometry: both the convolution kernel and the pooling window operate only on rectangular regions. This project explores graph-based methods such as meshes for the binary segmentation of medical images. Instead of a rectangular grid, the image is represented by a triangular mesh in which each pixel is a node, and each node is connected only to its immediate neighbors and its lower-left node.
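One way to build such a mesh from a pixel grid is sketched below. It assumes that "immediate neighbors" means the four axis-aligned neighbors, so that the extra lower-left diagonal splits every grid cell into two triangles. The function name `grid_to_triangle_mesh` and the row-major node indexing are hypothetical and may differ from the actual implementation.

```python
def grid_to_triangle_mesh(height, width):
    """Return (edges, faces) for a height x width pixel grid.

    The node index of pixel (row, col) is row * width + col.
    Edges are undirected and stored as (smaller_index, larger_index).
    """
    def node(r, c):
        return r * width + c

    edges = set()
    for r in range(height):
        for c in range(width):
            if c + 1 < width:
                edges.add((node(r, c), node(r, c + 1)))      # right neighbor
            if r + 1 < height:
                edges.add((node(r, c), node(r + 1, c)))      # bottom neighbor
            if r + 1 < height and c - 1 >= 0:
                edges.add((node(r, c), node(r + 1, c - 1)))  # lower-left diagonal

    faces = []
    for r in range(height - 1):
        for c in range(width - 1):
            tl, tr = node(r, c), node(r, c + 1)
            bl, br = node(r + 1, c), node(r + 1, c + 1)
            # The diagonal from top-right to bottom-left splits the cell in two.
            faces.append((tl, tr, bl))
            faces.append((tr, br, bl))
    return sorted(edges), faces


edges, faces = grid_to_triangle_mesh(3, 3)
print(len(edges), "edges,", len(faces), "triangles")
```

For a 3x3 image this yields 9 nodes, 16 edges and 8 triangles.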

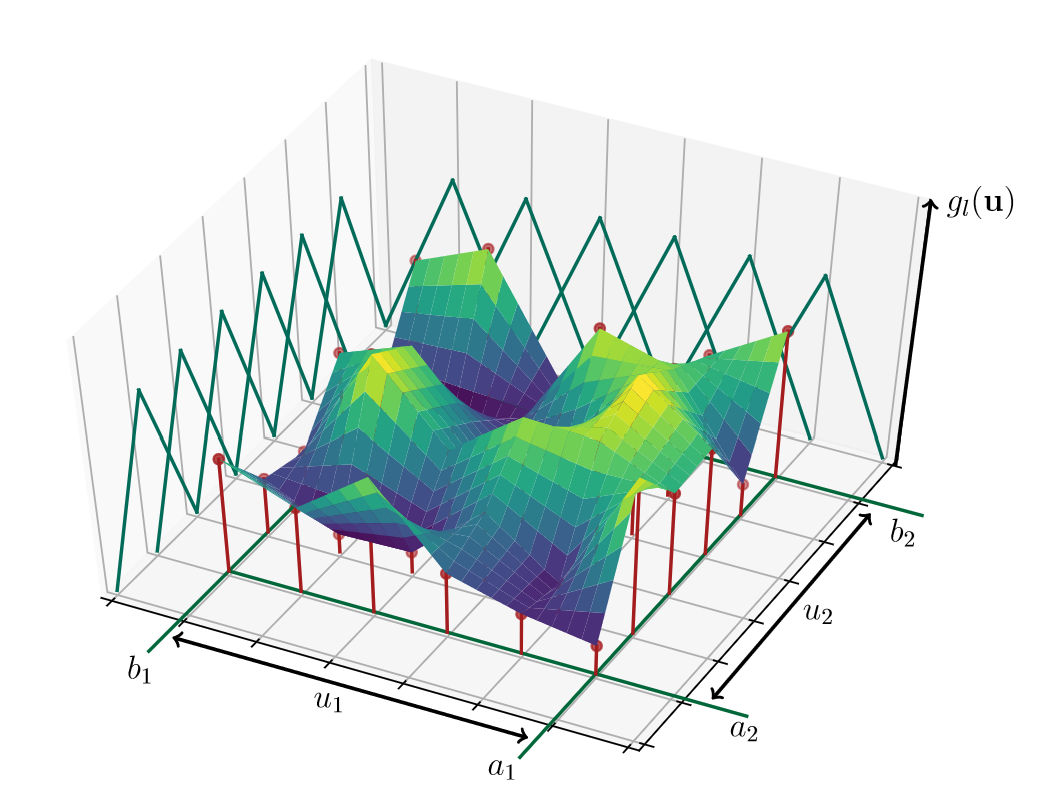

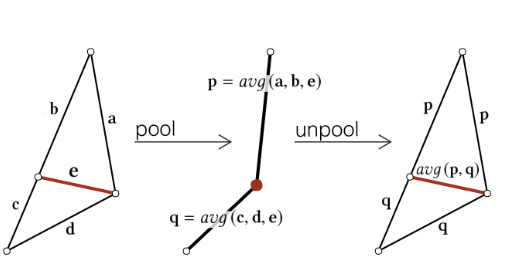

With this representation, traditional convolution and pooling are no longer compatible. For convolution, recent results in spline convolution make it an ideal choice, since its learnable parameters define a kernel built from B-splines (shown in Figure 3). To replace max pooling, we adapted the mesh pooling scheme from MeshCNN, which merges 2 faces into 2 edges without violating the mesh property (shown in Figure 4).
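The idea behind spline convolution can be sketched as follows: each edge carries a pseudo-coordinate in [0, 1]^2 (for example, the normalized offset between two nodes), and the kernel value for that edge is interpolated from a small grid of learnable weights using B-spline bases. The sketch below is a simplified, single-channel version with degree-1 (linear) B-splines. It omits details such as neighborhood normalization and a separate weight for the center node, and all names in it are hypothetical rather than taken from the repository.

```python
import math


def linear_bspline_basis(u, kernel_size):
    """Return {control_index: weight} for degree-1 B-splines at u in [0, 1]."""
    v = u * (kernel_size - 1)
    lower = min(int(math.floor(v)), kernel_size - 2)
    frac = v - lower
    return {lower: 1.0 - frac, lower + 1: frac}


def spline_kernel(u, weights):
    """Evaluate the continuous kernel at a 2D pseudo-coordinate u = (ux, uy).

    weights is a k x k grid (k >= 2) of learnable control values.
    """
    k = len(weights)
    bx = linear_bspline_basis(u[0], k)
    by = linear_bspline_basis(u[1], k)
    return sum(wx * wy * weights[ix][iy]
               for ix, wx in bx.items()
               for iy, wy in by.items())


def spline_conv(features, edges, pseudo, weights):
    """Sum neighbor features weighted by the kernel value on each edge.

    features: one scalar per node
    edges:    list of (target, source) node pairs
    pseudo:   one (ux, uy) pseudo-coordinate per edge
    """
    out = [0.0] * len(features)
    for (target, source), u in zip(edges, pseudo):
        out[target] += features[source] * spline_kernel(u, weights)
    return out


# Hypothetical 2x2 grid of control values (learned in practice).
weights = [[1.0, 2.0], [3.0, 4.0]]
features = [1.0, 2.0, 3.0]
edges = [(0, 1), (0, 2)]
pseudo = [(0.0, 0.0), (1.0, 1.0)]
out = spline_conv(features, edges, pseudo, weights)
```

Because the kernel is a continuous function of the pseudo-coordinate, it does not need neighbors to sit on a fixed rectangular grid, which is what makes it usable on a mesh.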

Experiments with this implementation showed that our proposed Hybrid U-Net is almost on par with the baseline. However, due to the complexity of meshes, the implementation (specifically mesh pooling) needs to be rethought and overhauled for efficiency, as it takes much longer to train than the traditional U-Net implementation, which uses vectorized operations.

You can check out the implementation at https://github.com/chengq220/Hybrid_UNet